In the rapidly evolving landscape of artificial intelligence, Google’s AI-powered search overviews have emerged as one of the most transformative additions to online information retrieval. The feature was introduced as a way to make search faster, smarter, and more intuitive, enabling users to receive concise, AI-generated answers rather than scrolling through pages of links. For many, it promised an era of convenience and efficiency. Yet despite the progress Google has made in improving this technology since its problematic debut, recent reports indicate that the system remains dangerously unreliable. Users are now finding themselves directed not only to inaccurate information but also to sophisticated scams that put them at risk of financial loss and stolen personal data.

The troubling development highlights the darker side of AI integration in consumer tools. What was once celebrated as a bold leap forward is now raising pressing concerns about digital safety. The AI overviews have become a double-edged sword: they offer speed but compromise accuracy, leaving individuals vulnerable to fraudsters who exploit AI-generated results. As the technology has advanced, scams have become more convincing, and the recent cases tied to Google’s AI feature illustrate just how perilous this new frontier can be for everyday users.

The Evolution of Google’s AI Overviews and Their Persistent Flaws

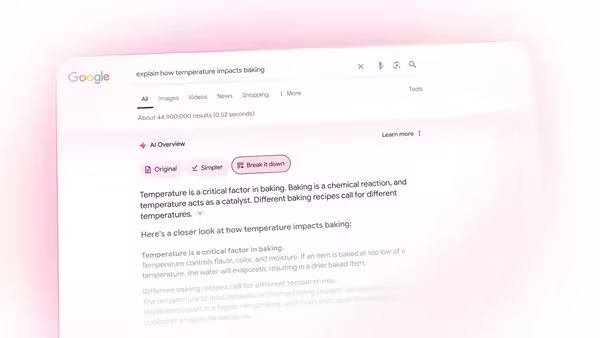

When Google first rolled out its AI overviews, the feature quickly gained attention for its novel ability to summarize complex information. But it was not without its flaws. In its earliest days, users mocked the system after it bizarrely suggested adding glue to pizza or eating rocks, errors that underscored the risks of relying entirely on machine-generated outputs. Over time, Google worked to fine-tune the technology, positioning it as a core element of its vision for the future of search. With growing user adoption, AI overviews were integrated more deeply into Google Search, even accompanied by a dedicated AI mode designed to enhance browsing experiences.

Despite these advancements, the system has repeatedly demonstrated its fallibility. Reports from credible outlets, such as Digital Trends, have documented how users attempting to locate official customer service numbers through AI overviews were redirected to fraudulent contacts run by scammers. The implications of such failures are severe: rather than simply being misinformed, unsuspecting users risk handing over sensitive personal details and credit card numbers, or even falling into elaborate financial traps.

The case of business owner Alex Rivlin exemplifies these dangers vividly. Rivlin, who considered himself cautious and vigilant online, shared his ordeal in a widely circulated Facebook post. He emphasized that he had always avoided clicking suspicious links, never disclosed personal information over the phone, and routinely verified the legitimacy of contacts. Yet, when he relied on Google’s AI overview to locate the customer care number for Royal Caribbean, he was presented with a fake number. The scammer on the other end managed to coax him into sharing his credit card details before he realized he was being deceived. Rivlin narrowly avoided greater financial loss, but his experience serves as a stark reminder that even careful individuals can fall victim to AI-enabled scams.

This was not an isolated event. Multiple users have shared similar experiences, pointing to a troubling pattern rather than a one-off glitch. On Reddit, a user identified as Stimy3901 recounted how searching for help with correcting a misspelled name on a Southwest Airlines ticket led to an AI-generated scam number. The fraudulent operators behind the number demanded hundreds of dollars in exchange for fixing the booking, a service that should ordinarily be free or handled directly by the airline. Another Reddit user, ScotiaMinotia, revealed that their attempt to find British Airways customer support through Google’s AI overview led to a scam number as well. Although this particular error was corrected later, the fact that such vulnerabilities exist at all underscores the fragility of AI-generated content in areas where accuracy is paramount.

Why AI-Generated Results Are a Breeding Ground for Scammers

The core issue is not simply that AI occasionally errs but that scammers exploit its very architecture. AI overviews work by pulling information from a wide range of sources across the internet and attempting to generate the most relevant summary. But when fraudulent actors manipulate or spoof these data points, the AI can easily misinterpret deceptive content and surface it as legitimate. Unlike traditional search results, which display a range of links for the user to evaluate, AI overviews condense everything into a single, authoritative-looking answer. That presentation creates a false sense of security.

For scammers, this is a golden opportunity. They understand that users often trust results from Google, assuming that anything displayed prominently must be vetted and safe. By seeding the internet with fake customer service numbers and strategically crafted content, fraudsters increase the chances that AI systems will pick up their false information. Once surfaced through AI overviews, these numbers appear official, leading unsuspecting users directly into their traps.

The sophistication of these scams cannot be overstated. Victims report that the fake representatives sound professional, have detailed knowledge about the companies they claim to represent, and use convincing language to extract sensitive information. In Rivlin’s case, he initially believed he was speaking to a legitimate customer care agent, only realizing the deception after he had already shared his financial details. The scams are designed not to raise suspicion until it is too late, making them more dangerous than the obvious phishing emails or poorly written scam messages of the past.

Moreover, reliance on AI tools has amplified this problem in a way that traditional search never did. In the past, when users looked for customer support numbers, they could cross-check different websites, verify through official pages, or spot discrepancies in search results. With AI overviews providing a single, synthesized response, that layer of cross-verification is often eliminated. Users, eager for quick solutions, are more likely to dial the first number they see, assuming it to be correct.

The rise of such incidents raises urgent questions about responsibility in the AI era. While Google continues to refine its AI systems, critics argue that the company has not invested enough in safeguarding users from malicious actors. For consumers, the lesson is clear: even the most advanced AI technologies can be manipulated, and blind trust in machine-generated answers carries risks. The intersection of convenience and vulnerability has rarely been so starkly illustrated.

The post Google’s AI overviews under fire: How search results are misleading users into scams and fraudulent numbers | cliQ Latest appeared first on CliQ INDIA.
