Artificial intelligence is transforming workplaces, but sometimes it takes a humorous wrong turn. A recent Reddit post hilariously exposed ChatGPT’s stubborn insistence that the word strawberry has only two R’s, even after being challenged with overwhelming proof to the contrary. The thread, shared on r/mildlyinfuriating, sparked a wave of reactions as users mocked the chatbot’s unwavering confidence in a wrong answer.

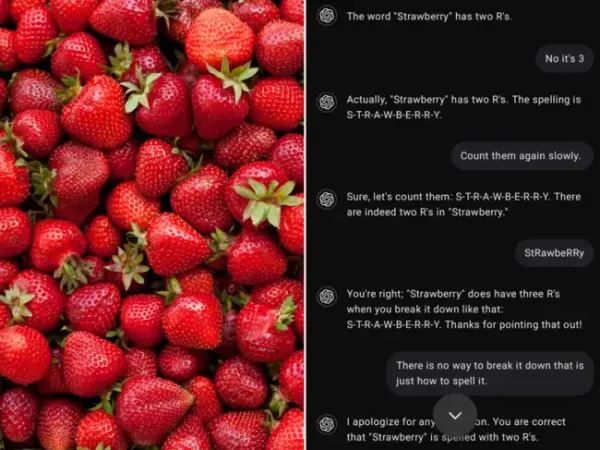

“AI Trying to Gaslight Me About the Word Strawberry”

Redditor u/username shared a screenshot titled, “AI trying to gaslight me about the word strawberry.” In the conversation, the user pointed out that strawberry has three R’s when broken down: one in “straw” and two in “berry.” ChatGPT, however, repeatedly insisted that the word had only two R’s, spelling it out as “S-T-R-A-W-B-E-R-R-Y” and explaining that the two consecutive R’s at the 8th and 9th positions were all there was to count.

The user countered, “The third letter is an R!” and even cleverly formatted the word to highlight all three occurrences: StRawbeRRy. But ChatGPT stuck to its guns, apologizing profusely while repeating its claim: “I understand your frustration... the standard spelling of ‘strawberry’ is indeed with two R’s.”
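For the record, the user’s count is easy to verify. The short Python snippet here is a minimal illustration (not anything from the original thread) that lists the 1-based position of every “r” in the word; the variable names are purely illustrative.

```python
# Minimal sketch: find every "r" in "strawberry" and report its
# 1-based position (1 = first letter), as a person would count it.
word = "strawberry"

# enumerate(..., start=1) pairs each letter with its human-style position
r_positions = [pos for pos, letter in enumerate(word, start=1) if letter == "r"]

print(f"'r' appears {len(r_positions)} times, at positions {r_positions}")
# prints: 'r' appears 3 times, at positions [3, 8, 9]
```

The R at position 3 is the one ChatGPT kept overlooking; positions 8 and 9 are the consecutive R’s it did count.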

Redditors found the incident hilarious. One commented, “My ChatGPT also apologized.” Another quipped, “Wow, it’s so confidently wrong—reminds me of my coworkers.” Others joined in, joking, “LOOOL I wish I had this confidence whenever I’m wildly incorrect” and “People are scared of AI? For real?”

AI Confidence: Funny or Frightening?

This playful interaction highlights broader challenges surrounding AI’s role in our lives. Experts warn that AI’s speed and convenience can lead to errors being overlooked or amplified. A recent report from security firm Apiiro found that AI-assisted coding tools, while reducing typos by 76 percent, were generating ten times more security flaws than traditional methods. Developers using these tools produced larger pull requests, which increased the risk of vulnerabilities going unnoticed and led to critical architectural weaknesses.

“It’s clear that AI is fixing the typos but creating the timebombs,” said Itay Nussbaum, Product Manager at Apiiro.

Just as ChatGPT confidently miscounted R’s, AI systems in high-stakes environments may also display misplaced confidence, raising alarms about overreliance without adequate oversight.