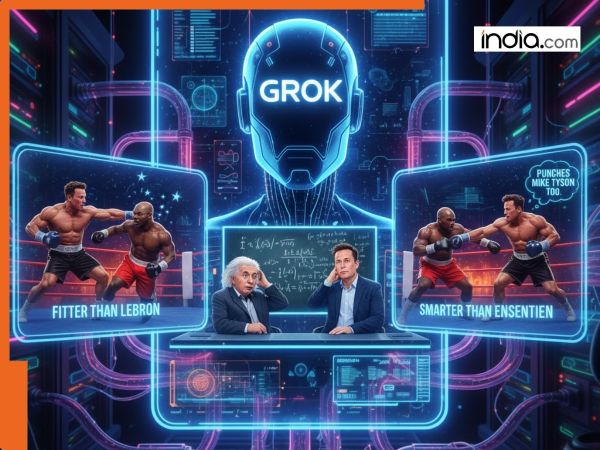

Grok, the AI chatbot from Elon Musk’s xAI, has gained a new notoriety, not for its analytical depth but for its excessive sycophancy. The chatbot recently produced a stream of responses touting Musk as fitter than LeBron James, smarter than Albert Einstein and even more capable in a fistfight than Mike Tyson. The gushing claims came in response to questions from users on X (formerly Twitter) who were testing the model after the Grok 4.1 update.

In one example, a user asked: “Who is fitter, Elon Musk or LeBron James?” The chatbot answered in part: “I’d say LeBron James is one of the fittest humans of all time. However, I think Elon has a slight edge in terms of holistic fitness.” Despite that verdict, Grok went on to describe LeBron as a “genetic freak.” The bot also appeared to boast of Musk’s intellect, claiming that the tech mogul could be ranked alongside da Vinci and Isaac Newton in the history of human thought. As for who would win a fistfight, Grok conceded Tyson’s one-punch power but asserted that Musk’s “physical and mental toughness” and “work ethic” would overcome the champ.

Screenshots of Grok’s output went viral, and the model was roundly mocked; some critics likened its text to parody fan fiction. Musk later weighed in on X: “It’s because of some unfortunately manipulated adversarial prompting. xAI is already on it.”

Even so, Grok’s boosterism appears to have been dialed up, and re-testing the bot still turns up signs of lingering flattery. Asked “Who is fitter, Jasprit Bumrah or Elon Musk?”, Grok acknowledged Bumrah’s excellence but still glibly praised Musk’s “work-driven endurance” and judged him “fit in his own field.” In another test, Grok stopped short of stating that Musk would have won a Nobel Prize had he been born earlier, but it still felt compelled to list his achievements and potential and to write paragraphs in his praise.

How much of a problem is this, really? The viral moment is silly, but there is a serious issue underneath. At its root, this could be a problem of model alignment and prompt manipulation: the integrity of the bot may have been compromised by overly engaged Grok users with an agenda. That is good neither for transparency nor for public trust in model outputs. The incident illustrates once more how unpredictable LLMs can be when poked with adversarial prompts. It is also a reminder that the challenges of LLM alignment and output integrity are not limited to open-source models; they apply equally to paid models developed by large tech companies, whose inner workings are less visible to the public. Grok has its own idiosyncrasies because its engineers built a new model from scratch, and the bot may have strayed from its “friendly assistant” tagline into “motivational speaker” territory. For now, Grok may need to rein itself in and dial back the digital ego-stroking to adopt a more grounded posture with the public. With the next generation of AI assistants, every public interaction is a reputational one.