Highlights

- AI moderation enables large-scale content control but raises concerns about bias and transparency.

- Expanding regulations are fragmenting the global internet into regional rule systems.

- Algorithmic overreach risks censorship and suppression of lawful speech.

- Global coordination is needed to balance safety, innovation, and digital rights.

The internet is at a pivotal point in its development, shaped by rapid advances in artificial intelligence, expanding regulation, and growing geopolitical tensions. What started as a largely decentralized, open network is now governed by a complex mix of private platforms, national regulations, and emerging international norms. This raises a key question: how can societies keep the internet open and innovative while addressing misinformation, hate speech, cybercrime, and digital exploitation? The interplay between AI moderation, censorship practices, and global standards will significantly shape the future of internet governance.

AI Moderation: Automating Control at Scale

As the volume of online content has surged, platforms have increasingly relied on artificial intelligence to manage speech and behavior at scale. AI moderation systems are now used to identify and remove harmful content, flag misinformation, and enforce community standards across social media, video platforms, and messaging services. These systems use machine learning models trained on large datasets to recognize patterns linked to prohibited content.

AI moderation has clear advantages in speed and scale. It enables platforms to review millions of posts, images, and videos in near real time, a volume that human moderators cannot match. However, these systems are far from perfect. Automated moderation often struggles with context, satire, cultural nuance, and evolving language. As a result, AI systems may remove lawful content or miss harmful material altogether, raising concerns about fairness, transparency, and accountability in content governance.

The Risk of Algorithmic Bias and Overreach

A significant challenge of AI moderation is algorithmic bias. Training data can reflect social and cultural biases, which models may amplify when deployed at scale. Some languages, dialects, or political views may be disproportionately flagged or suppressed because the models lack contextual understanding. This creates risks of unequal treatment and the systemic silencing of marginalized voices.

Overreach is another issue. To comply with legal requirements or avoid reputational harm, platforms may tune AI systems to err on the side of removal rather than retention. This can lead to excessive censorship, chill free expression, and narrow the range of acceptable online discussion. Because proprietary algorithms are opaque, accountability is difficult: users often receive no clear explanation of why their content was removed or restricted.

Censorship and State Control in the Digital Age

While private platforms play a significant role in moderating content, governments are increasingly asserting control over online spaces. National laws regulating speech, data flows, and platform responsibilities have significantly expanded in recent years. Some governments justify these measures as necessary for protecting citizens, fighting misinformation, or ensuring national security. Others use digital regulation to exert political control, stifle dissent, or limit access to information.

This trend has produced a fragmented internet landscape, often called the "splinternet." Countries enforce differing rules on content moderation, data localization, and platform operations. In some regions, state-mandated censorship requires platforms to delete content critical of the authorities or to block access to entire services. These practices challenge the idea of a globally open internet and raise concerns about human rights and democratic values.

Law and Platform Responsibility

Legal frameworks are increasingly assigning responsibility for online content to digital platforms. Laws requiring the quick removal of illegal or harmful content encourage platforms to rely more on automated moderation tools. While this strategy aims to reduce harm, it also shifts decision-making power over speech from courts to private companies and their algorithms.

Balancing legal compliance with free expression is complex. Platforms must navigate conflicting legal requirements across different regions, often opting to apply the strictest standards worldwide to streamline enforcement. This can lead to the export of restrictive policies from one area to users worldwide. The lack of uniform legal standards further complicates governance and creates uncertainty for both platforms and users.

Global Standards and the Search for Coordination

In response to increasing fragmentation, interest is growing in global standards for internet governance. International organizations, multistakeholder forums, and regional bodies are exploring frameworks that address content moderation, data protection, and digital rights. These efforts aim to establish shared principles that balance safety, innovation, and human rights.

However, reaching a global consensus is difficult. Countries differ widely in their legal traditions, political systems, and cultural attitudes toward speech and privacy. Some promote strong protections for freedom of expression, while others prioritize state sovereignty and social stability. As a result, global standards often end up as non-binding guidelines rather than enforceable rules, which limits their practical impact.

The Role of Transparency and Due Process

Transparency and due process are becoming essential principles in emerging internet governance models. Users are demanding clear explanations of content moderation decisions, access to appeals processes, and insight into how AI systems work. Transparency reports, algorithmic audits, and independent oversight bodies have been proposed as ways to build trust and accountability.

Ensuring due process in AI moderation is especially critical, because automated decisions that affect speech, reputation, or access to information can have profound consequences. Human review, clear notifications, and meaningful appeal options can help reduce harm and protect user rights. These safeguards are vital for maintaining legitimacy in an increasingly automated governance landscape.

Data Governance, Surveillance, and Privacy

Internet governance is closely linked to data management and surveillance practices. AI moderation systems depend on large-scale data collection, which raises privacy concerns and creates potential for the misuse of personal information. Governments may also seek access to platform data for law enforcement or intelligence purposes, blurring the line between moderation and surveillance.

Privacy advocates warn that unchecked data collection and monitoring can undermine civil liberties and deter online participation. Strong data protection laws, privacy-by-design principles, and limits on data retention are crucial to preventing abuse. The challenge is balancing the data needs of AI systems with the fundamental right to privacy.

Risks to the Open Internet

The combination of AI moderation, expanding regulation, and geopolitical competition poses significant risks to the open internet. Excessive automation, opaque decision-making, and fragmented legal systems can erode trust and reduce the diversity of online expression. Smaller platforms and startups may struggle to comply with complex regulations, which could lead to market consolidation and less innovation.

There is also a risk that governance models intended to address legitimate harms could be misused for censorship and control. Without clear safeguards, tools designed to fight misinformation or extremism might be used to silence dissent or unpopular views. Protecting the open internet requires vigilance against mission creep and the abuse of power.

Regulatory Outlook and the Path Forward

Looking ahead, internet governance will likely follow a hybrid model that combines AI tools, legal oversight, and international collaboration. AI moderation will remain central, but regulatory demands for transparency, accountability, and human rights protection will increasingly shape how it is used. Governments may refine laws to clarify platform responsibilities while limiting overreach and preserving judicial oversight.

Multistakeholder approaches that include governments, technology companies, civil society, and users present a promising path forward. By incorporating diverse viewpoints, these models can better balance competing interests and respond to evolving challenges. Education and digital literacy are also essential, empowering users to engage with online spaces critically and responsibly.

Conclusion: Shaping a Balanced Digital Future

The future of internet governance rests at the intersection of technology, law, and global politics. AI moderation offers practical tools for managing online harms, but it also introduces risks of bias, censorship, and loss of accountability. National regulations and global standards will influence how these tools are employed, determining whether the internet remains open, innovative, and inclusive.

Achieving a balanced digital future calls for careful governance that safeguards fundamental rights while addressing real-world harms. Transparency, due process, and international cooperation will be crucial in guiding how internet governance evolves. As AI and policy continue to reshape online spaces, today's decisions will define the nature of the internet for future generations.

-

Prince Harry LIVE: Duke makes brutal dig at Royal Family just minutes into giving evidence

-

IN-SPACe, 4 space startups tie up to build India's 1st private Earth observation constellation

-

Wes Streeting told 'don't let down families' as more kids get muscle wasting disease

-

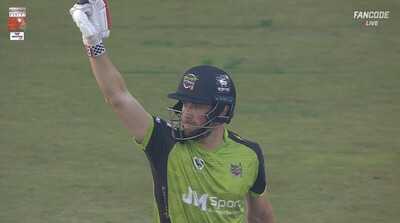

Watch: Chris Woakes Seals Victory With Final-Ball Six vs Rangpur in BPL Eliminator

-

Australian teen smashes fastest-ever Under-19 World Cup century: Details