Highlights

- Google AI Mode transforms Google Search into a conversational, multimodal search engine powered by Gemini 2.5.

- FastSearch and RankEmbed signals shift SEO focus from keyword ranking to semantic relevance.

- Publishers face rising zero-click searches, urging a pivot to LLM-optimized, multimedia-rich content.

Google's AI Mode search tool shows what the company's latest and most powerful artificial intelligence can do for its users. AI Mode launched as a Google Labs experiment in March 2025 and went live the next month. Since launch, it has expanded rapidly, turning the “search engine” into an “answer engine.”

AI Mode adds conversational and multimodal capabilities to Search, improving the experience for its user base.

Global Expansion and Deeper Conversation

AI Mode is now available in more than 35 additional languages and over 40 new countries and territories, bringing its reach to around 200 countries. This global expansion is made possible by the new Gemini model for Search, which is designed to understand the subtleties of local languages so that results are genuinely helpful and relevant.

The adoption of AI Mode suggests users are embracing this new method of querying: people are asking questions nearly three times longer than traditional searches and diving deeper into complex topics. In India, a global leader in voice and visual search, AI Mode is being introduced in seven new Indian languages, including Bengali, Tamil, and Urdu.

Moreover, the Search Live feature, which enables voice-based conversational search, was recently introduced in India, making it the first country outside the United States to receive it.

Multimodal Capabilities and New Use Cases

A key feature distinguishing AI Mode is its multimodality, which enables the processing and generation of content beyond standard text, such as images and video. Although AI Mode initially launched as a primarily text-based tool in the U.S., Google is integrating visual results, recognizing that some queries are poorly suited for text-only answers.

If users seek inspiration or shopping assistance, AI Mode can now respond with images. For instance, a user seeking fashion inspiration could ask, “Can you show me the latest trending casual shoe trend?” and receive a series of visual results. These visual answers combine the Gemini 2.5 AI model with Google Search, Lens, and Image search capabilities.

The Technology Underpinning AI Ranking

AI Mode uses a proprietary technology called FastSearch to rank pages. Unlike the regular organic search algorithm, FastSearch is designed to rapidly generate concise search results that ground large language models (LLMs) in factual information from the web. FastSearch is based on RankEmbed signals, which come from a deep learning system that uses search logs and human-rated scores to develop ranking signals.

While Google affirms that standard SEO practices are necessary to ensure a page is crawled, indexed, and eligible to appear in AI Mode, the actual ranking process relies on FastSearch, which uses different considerations. Experts argue that the goal of content has shifted: it doesn’t need to rank in the traditional sense but must be “retrieved, understood, and assembled into an answer” by GenAI systems. This means topical relevance and semantic meaning carry more weight than keyword relevance.
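The difference between keyword relevance and semantic relevance can be illustrated with a toy comparison. This is a minimal sketch and not Google's FastSearch or RankEmbed: the texts are invented, and the three-dimensional "embeddings" are hand-picked numbers standing in for vectors that a real system would obtain from a trained model.

```python
import math

# All texts and vectors below are made up for illustration only.
QUERY = "how to fix a flat bicycle tire"
DOC_A = "bicycle tire bicycle tire best bicycle tire deals"  # keyword-heavy, off-topic
DOC_B = "repairing a punctured bike wheel step by step"      # on-topic, different words

# Hypothetical hand-picked "embeddings"; a real system would use a trained model.
EMBEDDINGS = {
    QUERY: [0.9, 0.1, 0.2],
    DOC_A: [0.2, 0.9, 0.1],
    DOC_B: [0.8, 0.1, 0.3],
}


def keyword_overlap(query, doc):
    """Fraction of distinct query words that also appear in the document."""
    query_words = set(query.lower().split())
    doc_words = set(doc.lower().split())
    return len(query_words & doc_words) / len(query_words)


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(x * x for x in b))
    return dot / (norm_a * norm_b)


for name, doc in (("DOC_A", DOC_A), ("DOC_B", DOC_B)):
    kw = keyword_overlap(QUERY, doc)
    sem = cosine(EMBEDDINGS[QUERY], EMBEDDINGS[doc])
    print(f"{name}: keyword={kw:.2f} semantic={sem:.2f}")
```

With these assumed vectors, the keyword score favors the keyword-stuffed page while the similarity score favors the page that actually answers the question, which is the shift in emphasis the paragraph above describes.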

Impact on Publishers and Content Strategy

The transformation of Google Search poses significant challenges, particularly for publishers. The shift toward AI-generated summaries and the use of the query fan-out technique (which anticipates and answers follow-up queries immediately) results in a higher number of “zero-click” searches.
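As a rough illustration of the fan-out idea, the sketch below expands one query into a few anticipated follow-ups, runs them concurrently against a tiny in-memory corpus, and merges the results. Everything here is hypothetical: the corpus, the `expand` templates, and the word-overlap `search` function are assumptions made for the example and do not reflect how Google implements the technique.

```python
from concurrent.futures import ThreadPoolExecutor

# Hypothetical toy corpus; a real system would query a web-scale index.
CORPUS = {
    "doc1": "best running shoes for flat feet",
    "doc2": "running shoes size guide and fit tips",
    "doc3": "running shoes price comparison",
    "doc4": "marathon training plan for beginners",
}


def expand(query):
    """Hypothetical fan-out: the original query plus two anticipated follow-ups."""
    return [query, f"{query} price", f"{query} size guide"]


def search(sub_query, top_k=2):
    """Rank documents by the number of words shared with the sub-query."""
    terms = set(sub_query.lower().split())
    scored = [(len(terms & set(text.split())), doc_id) for doc_id, text in CORPUS.items()]
    hits = [pair for pair in scored if pair[0] > 0]
    hits.sort(key=lambda pair: -pair[0])  # stable sort keeps corpus order on ties
    return [doc_id for _, doc_id in hits[:top_k]]


def fan_out(query):
    """Run every sub-query concurrently and merge results without duplicates."""
    with ThreadPoolExecutor() as pool:
        result_lists = list(pool.map(search, expand(query)))
    merged = []
    for results in result_lists:
        for doc_id in results:
            if doc_id not in merged:
                merged.append(doc_id)
    return merged


print(fan_out("running shoes"))
```

In this toy setup the merged list covers pricing and sizing pages that the single original query would not have surfaced on its own, so the user has less reason to click through or search again.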

In Australia, according to data provided by James Purtill, top news websites have seen steep year-on-year declines in monthly audiences, leading some smaller publishers to warn of layoffs. Digital marketers also face an attribution problem, as traffic generated by AI Mode cannot be distinguished from regular organic search within Google Analytics 4 or Google Search Console.

Conclusion

Publishers in this ever-changing environment are advised to focus on delivering unique and valuable content that meets users’ information needs. The content should be written so that LLMs can interpret it as easily as possible: use clear language and organize ideas under meaningful headings (such as H2, H3).
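One way to see why headings matter is to look at how a retrieval pipeline might split a page into heading-scoped passages. The sketch below is an assumption about such a pipeline, not a documented Google behavior; the function name `split_by_headings` and the sample page are hypothetical, and the page is assumed to use Markdown-style `##` and `###` headings.

```python
import re

HEADING = re.compile(r"^(#{2,3})\s+(.*)$")  # matches H2 and H3 lines only


def split_by_headings(markdown_text):
    """Return (heading, body) pairs, one per H2/H3 section of the page."""
    chunks = []
    heading, body = None, []

    def flush():
        # Store the section collected so far, skipping an empty preamble.
        if heading is not None or any(line.strip() for line in body):
            chunks.append((heading, "\n".join(body).strip()))

    for line in markdown_text.splitlines():
        match = HEADING.match(line)
        if match:
            flush()
            heading, body = match.group(2).strip(), []
        else:
            body.append(line)
    flush()
    return chunks


# Hypothetical sample page.
PAGE = "## What is AI Mode\nAn answer engine.\n\n### Languages\nMore than 35.\n"

for title, text in split_by_headings(PAGE):
    print(f"{title!r}: {text!r}")
```

Each passage carries its own descriptive label, so a section with a clear heading can be matched to a question without the rest of the page.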

Moreover, because AI Mode is multimodal, publishers should start integrating and optimizing image and video content, ensuring that it displays well at different aspect ratios to catch the user’s attention. The human factor remains at the core of marketing strategy even as digital attribution becomes harder: experts point to building brand awareness and user loyalty as the keys to success in AI-driven search.

-

'Tron: Ares' star Jodie Turner-Smith talks Athena, AI and her villain era

-

Ibrahim Reminds Fans Of Dad Saif Ali Khan On Ramp; Sara Paints Pretty Picture By His Side

-

Eamonn Holmes issues worrying health statement as he shares picture from hospital

-

IndiGo's 'Extreme Heavy' Elephant Sticker on Coffin Sparks Controversy Online

-

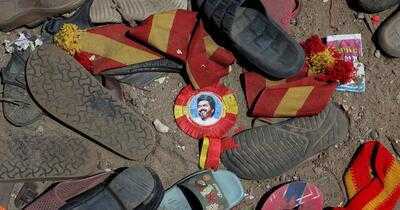

Actor-politician Vijay's party moves SC seeking independent probe into Karur stampede