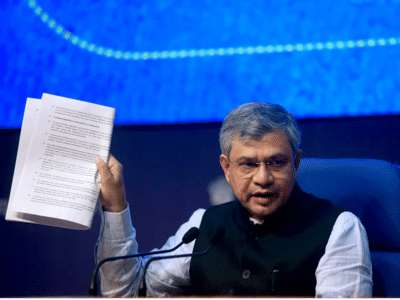

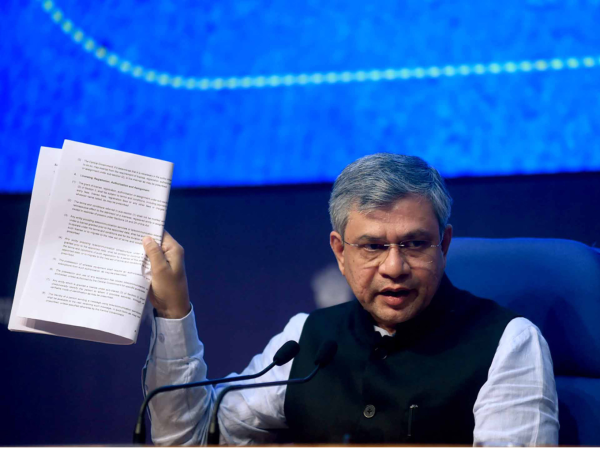

The Central government has amended the Information Technology (Intermediary Guidelines and Digital Media Ethics Code) Rules, 2021 to formally bring AI-generated content within India’s regulatory ambit. The amendments, notified by the Union Ministry of Electronics and Information Technology (MeitY) today, will come into effect from February 20.

The amended rules, Information Technology (Intermediary Guidelines and Digital Media Ethics Code) Rules, 2026, introduce a definition of “synthetically generated information”, which covers audio, visual or audio-visual content that is modified or altered using computer resources in a manner that appears real or authentic and is likely to be perceived as indistinguishable from a natural person or real-world event.

The changes place fresh responsibilities on social media platforms and online intermediaries that allow users to create or share such content. These intermediaries will now have to label AI-generated content clearly and prominently so that users can easily identify it as “synthetically generated”.

The amendments substantially reduce enforcement and grievance redressal timelines under Rule 3 of the IT Rules.

The deadline for complying with lawful takedown directions has been shortened from 36 hours to 3 hours. The grievance disposal period has been reduced from 15 days to 7 days. For urgent complaints, the response timeline has been cut from 72 hours to 36 hours, while certain content removal complaints must now be acted upon within 2 hours, down from 24 hours earlier.

Large social media platforms such as Meta’s Instagram, X and LinkedIn will be required to seek declarations from users on whether uploaded content is AI-generated. If such content is identified, it must be displayed with a visible label or disclosure.

The government has also clarified that platforms using automated tools to remove illegal or harmful AI-generated content will not lose their legal protection under the IT Act’s safe harbour provisions.

In addition, wherever technically feasible, synthetically generated content must also carry permanent metadata or other provenance mechanisms, including a unique identifier that allows identification of the computer resource used to generate or modify it.

However, the notification clarifies that routine or good-faith activities — such as editing, formatting, transcription, translation, accessibility enhancements, educational or training materials, and research outputs — will be excluded, provided they do not result in false or misleading electronic records.

The move comes amid growing concern over deepfakes, AI impersonation and misinformation. The government said the amendments aim to balance innovation with user safety and accountability.

In its draft proposal released in October, the ministry had proposed mandating that platforms enabling the creation or dissemination of AI-generated content prominently label such content or embed permanent metadata identifiers.

Under the draft, significant social media intermediaries were also to be required to seek user declarations on AI-generated content and deploy automated tools to verify them. MeitY had invited stakeholder feedback on the draft until November 6, 2025.

The post Centre Notifies IT Rules Amendment to Regulate AI-Generated Content appeared first on Inc42 Media.
